Event Streaming

Built on Apache Kafka®, Keen lets you easily collect event data from anywhere, add rich attributes, and send it wherever you need.

Stream from any source

Use Keen’s powerful REST API and SDKs to collect event data from anything connected to the internet: your websites, apps, backend servers, smart devices, third-party systems, and more.

- POST from anywhere in your stack with over 15 SDKs from JavaScript to Go.

- Batch upload historical data from your production systems.

- Easily collect data using streams, webhooks, or even AMP-Analytics.

- Use our Data Modeling Guide to make your analytics more powerful and accessible, avoid common beginner mistakes, and save time.
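As a rough illustration of the points above, here is a minimal Python sketch of what posting a single event and batch uploading historical events could look like over plain HTTP. The base URL, the `/projects/<id>/events/<collection>` paths, the `Authorization` header, and the `keen.timestamp` property are assumptions drawn from Keen's public API conventions, and the helper names are hypothetical; check the current API reference before relying on any of them. The functions only build requests, so nothing is sent until you pass one to `urllib.request.urlopen`.

```python
import json
import urllib.request
from datetime import datetime, timezone

# Assumed base URL and API version; confirm against Keen's API reference.
KEEN_API_BASE = "https://api.keen.io/3.0"


def _post(url, write_key, payload):
    """Build (but do not send) a JSON POST request authorized by a write key."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": write_key, "Content-Type": "application/json"},
        method="POST",
    )


def build_event_request(project_id, collection, write_key, event):
    """Request that records one event in one collection (hypothetical helper)."""
    url = f"{KEEN_API_BASE}/projects/{project_id}/events/{collection}"
    return _post(url, write_key, event)


def build_batch_request(project_id, write_key, events_by_collection):
    """Request that records many events, keyed by collection name (hypothetical helper)."""
    url = f"{KEEN_API_BASE}/projects/{project_id}/events"
    return _post(url, write_key, events_by_collection)


def with_original_timestamp(event, occurred_at):
    """Return a copy of the event stamped with when it originally happened.

    Assumes historical events carry their time in `keen.timestamp` as ISO-8601.
    Pass a timezone-aware datetime; a naive one is interpreted as local time.
    """
    stamped = dict(event)
    keen = dict(stamped.get("keen", {}))
    keen["timestamp"] = occurred_at.astimezone(timezone.utc).isoformat()
    stamped["keen"] = keen
    return stamped
```

A backfill from a production system might then stamp each old record with `with_original_timestamp`, group the results by collection, and send them with `urllib.request.urlopen(build_batch_request(...))`.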

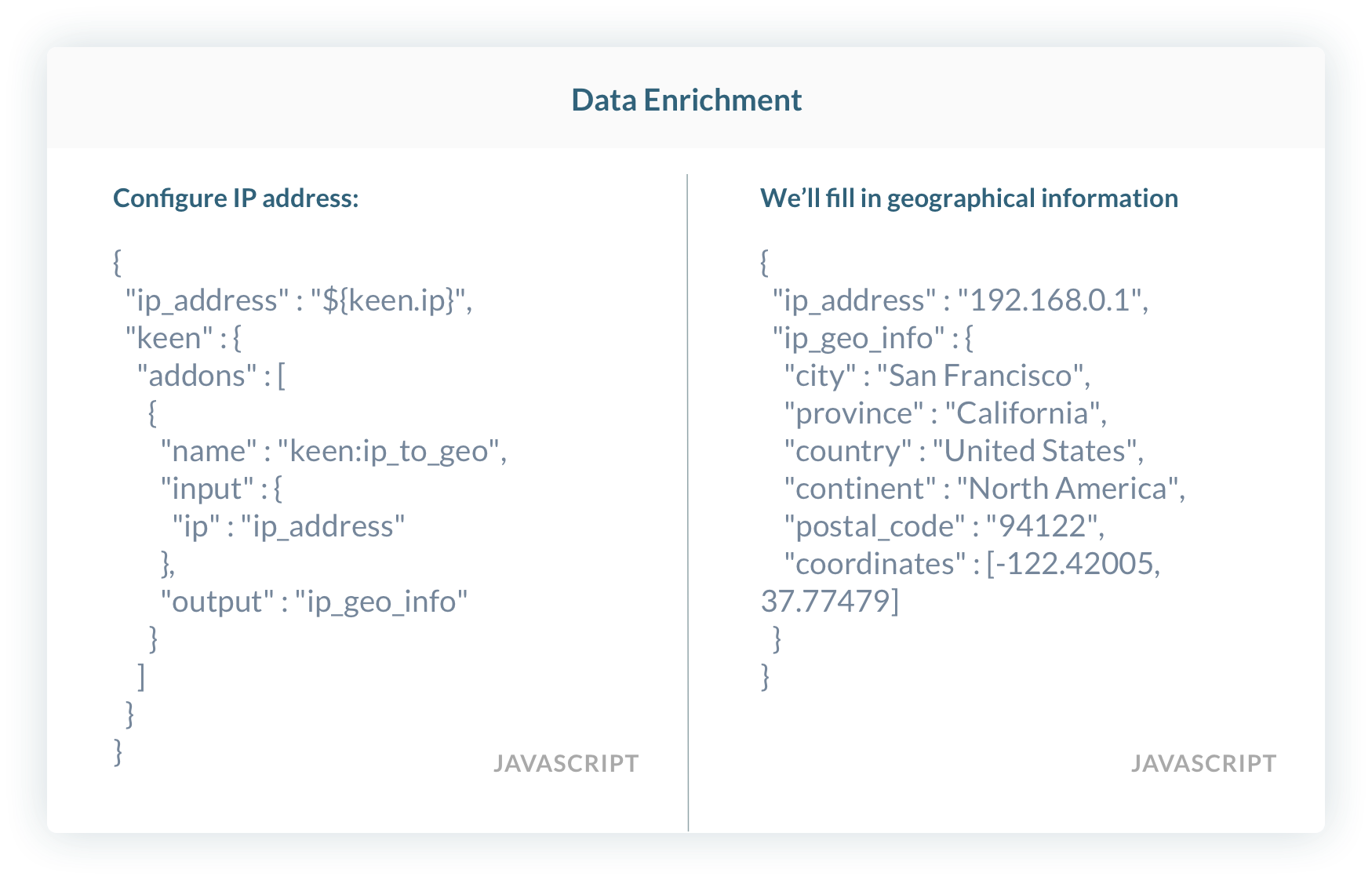

Enrich & transform

Add details to the billions of events you capture.

- Use enrichments to add dimensions like location, weekday, access device, and more.

- Use transformations, enabled by custom Access Keys, to add entity data from custom data sources.

- To collect events on the web, use Keen’s Auto-Collector, which automatically captures events with data-rich properties.
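To make the enrichment idea concrete, here is a small Python sketch of how an event might request enrichments at collection time. It assumes enrichments are declared in a `keen.addons` list on the event, each with a `name`, an `input` mapping, and an `output` property, and that addon names such as `keen:ip_to_geo`, `keen:ua_parser`, and `keen:date_time_parser` exist. These details, along with the helper and property names, are assumptions for illustration and should be verified against Keen's enrichment documentation.

```python
def add_enrichments(event, ip_property="ip_address", ua_property="user_agent"):
    """Return a copy of the event that asks for location, device, and date enrichments.

    The addon names and input keys below are assumed, not confirmed by this page.
    """
    enriched = dict(event)
    keen = dict(enriched.get("keen", {}))
    # Keep any addons already declared on the event, then append ours.
    addons = list(keen.get("addons", []))
    addons += [
        # Location: resolve the IP address stored in `ip_property`.
        {"name": "keen:ip_to_geo", "input": {"ip": ip_property}, "output": "ip_geo_info"},
        # Access device: parse the user agent string stored in `ua_property`.
        {"name": "keen:ua_parser", "input": {"ua_string": ua_property}, "output": "parsed_user_agent"},
        # Weekday and other date parts: derive them from the event timestamp.
        {"name": "keen:date_time_parser", "input": {"date_time": "keen.timestamp"}, "output": "timestamp_info"},
    ]
    keen["addons"] = addons
    enriched["keen"] = keen
    return enriched
```

The original event is left unmodified, so the same raw record can be sent with or without enrichments.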

Send data wherever you need it

Your data is one of your most valuable assets. We’ll never force you into a data silo.

- Safely store data with Keen for instant querying and building real-time data products with Keen Compute.

- Stream enriched data to Amazon S3 and harness the power of your data anywhere.

- Connect to any data store or application with our API.

Fully managed event data pipeline

A customizable data pipeline without the overhead of a DIY solution.

Get up and running quickly with our comprehensive set of tools and SDKs. You can be collecting data in no time.

Put Keen’s people on pager duty instead of yours. Our global team of developers and support staff ensures that the platform is running 24/7, 365 days a year.

Build exactly what you need with a fully customizable stack. You have total control over what data is collected and how it’s described.

Decrease your operational and delivery risk. Avoid the inevitable headaches that come with maintaining a fault-tolerant data pipeline.

“The biggest thing we were looking for was flexibility—the ability to collect data from any interaction on any media property. We needed control over how we were collecting and analyzing our data.”