Event Streaming and Analytics:

Everything a Dev Needs to Know


Are you new to event streaming and analytics infrastructure or evaluating potential solutions? Our Everything a Dev Needs to Know guide takes you through all of the key factors to consider, from implementation to maintenance of scalable, highly available data infrastructure.

Introduction

Right now, data doubles every two years. By 2025, the amount of data in the world will double every 12 hours! The prominence of B2B SaaS (growing 30% per year) and IoT connected devices (expected to double in number over the next four years) contribute significantly to the unprecedented generation of event data.

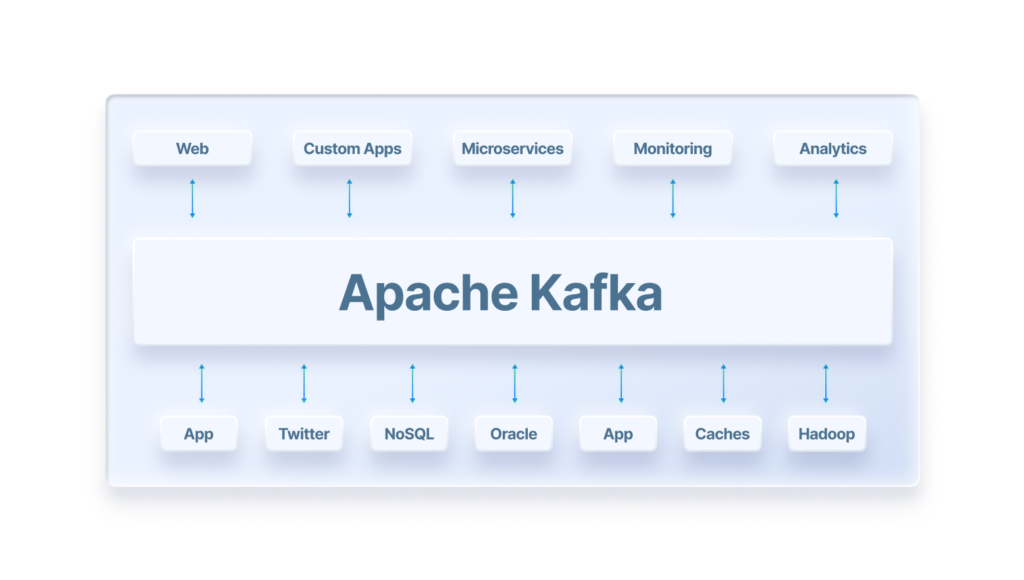

Winning companies have created big data infrastructures to capture and leverage the massive volumes of event data they generate to monetize their platforms and deliver personalized product experiences in real time. In fact, Apache Kafka, an open-source distributed event streaming platform, is used by an estimated 80% of all Fortune 100 companies. Kafka helps businesses scale cloud server resources in data center operations with improved user traffic metrics, network security protection, and real-time platform analytics.

Leveling the playing field so that small-to-midsize businesses (SMBs) can compete with the giants requires implementing event streaming and analytics at a big data scale. Robust event streaming infrastructure enables SMBs to make decisions informed by data as well as create disruptive, event-driven products and applications. For example, Chargify, the leader in billing for B2B SaaS, recently launched an event-driven product, Events-Based Billing, which allows SaaS companies to create a billing model based on real-time, custom metrics they define. Suppose a team wants to charge based on granular metrics like number of API requests, API request complexity (such as number of attributes queried), or phone call duration in minutes. In these cases, Events-Based Billing provides the data streaming and analytics functionality to do just that—no more end-of-the-day batch or rigid units like in legacy metered billing models.
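To make the idea of billing on custom, real-time metrics concrete, here is a minimal sketch that totals usage charges from a stream of events. It is an illustration only, not Chargify's implementation: the `UsageEvent` fields, the metric names, and the per-unit prices are all assumptions made up for this example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageEvent:
    customer_id: str
    metric: str      # hypothetical metric name, e.g. "api_request" or "call_minutes"
    quantity: float  # units consumed by this single event


# Hypothetical per-unit prices in cents; real prices would come from the billing plan.
PRICE_CENTS_PER_UNIT = {"api_request": 1, "call_minutes": 5}


def bill_in_cents(events):
    """Sum usage charges per customer from an iterable of usage events."""
    totals = defaultdict(int)
    for event in events:
        charge = round(event.quantity * PRICE_CENTS_PER_UNIT[event.metric])
        totals[event.customer_id] += charge
    return dict(totals)


events = [
    UsageEvent("acme", "api_request", 3),
    UsageEvent("acme", "call_minutes", 12),
    UsageEvent("globex", "api_request", 10),
]
invoice = bill_in_cents(events)
```

Because each event carries its own metric and quantity, the charge can be updated as events arrive instead of waiting for an end-of-day batch.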

Chargify wanted to get to market quickly so they acquired Keen, an all-in-one event streaming platform, to accelerate the development of their disruptive, event-driven products. Keen offers pre-configured, fully-managed data infrastructure with data streaming and analytics capability—all accessible via an API for faster development.

Using a complete event streaming platform is a popular method of building an event-driven product or application. In addition to the all-in-one solution, there are a couple of other high-level implementation options worth considering: managed Kafka and building in-house. The decision among these three implementation options typically comes down to a trade-off among cost, resources, time, and performance requirements. More on that later!

In this guide, we will:

- Introduce technologies for implementing event streaming architecture

- Review everyday event streaming use cases for SMBs and enterprises

- Discuss the functional differences in the implementation of event streaming solutions and their trade-offs

- Spotlight the important role that storage and analytics play in an event streaming solution

- Present solutions to consider for complete event streaming platforms, managed Kafka, and DIY

Overview: Event Streaming Architecture & Cloud Platforms

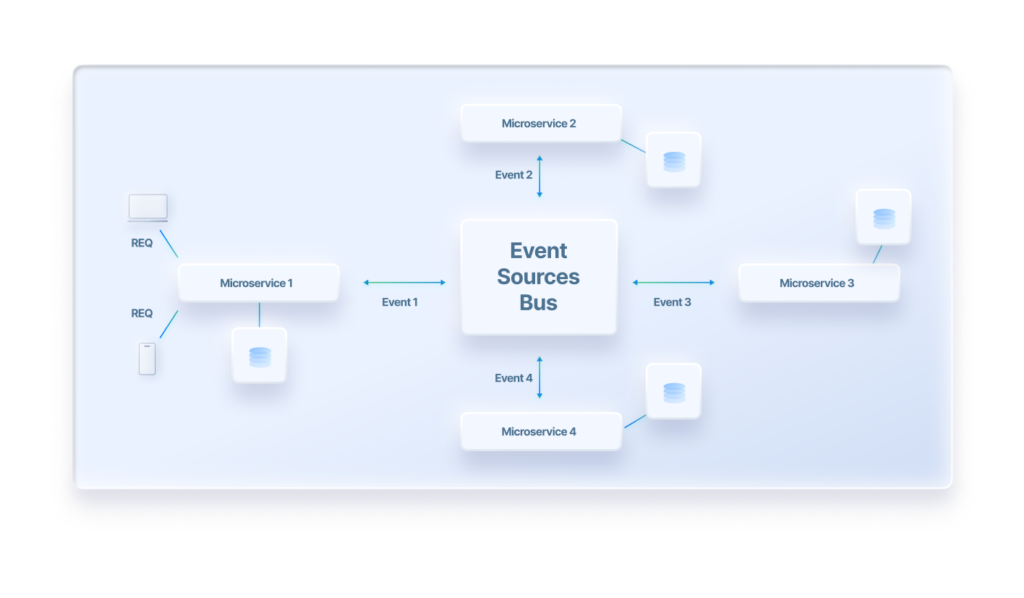

Apache Kafka is an open-source event streaming platform that implements pub/sub messaging on distributed cloud architecture. Pub/sub, a messaging design pattern and sibling of message queues, decouples publishers from subscribers: neither side needs any knowledge of the other. This decoupling allows for increased scalability and a more dynamic network topology, which supports event streaming at an enterprise scale. Engineers at LinkedIn originally developed Kafka to meet the infrastructure demands of a social network with hundreds of millions of active users. LinkedIn modeled the original Kafka event streaming architecture on functionality used by RabbitMQ, Hadoop, and ETL tools. Pub/sub messaging is trusted for asynchronous broadcasting.
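The pub/sub pattern described above can be sketched in a few lines. This is a toy, in-memory illustration of the pattern only: the `Broker` class and the topic names are hypothetical, and it delivers messages synchronously, whereas Kafka persists messages in a distributed log that consumers read from at their own pace.

```python
from collections import defaultdict


class Broker:
    """Toy in-memory broker: routes each message to every subscriber of its topic."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        # The publisher never sees who (if anyone) receives the message.
        for callback in self._subscribers.get(topic, ()):
            callback(message)


broker = Broker()
received_by_billing = []
received_by_analytics = []

# Two independent subscribers listen to the same topic.
broker.subscribe("page_views", received_by_billing.append)
broker.subscribe("page_views", received_by_analytics.append)

broker.publish("page_views", {"user": "u1", "path": "/pricing"})
broker.publish("signups", {"user": "u2"})  # no subscribers, so nothing is delivered
```

Adding a third subscriber requires no change to the publishing code, which is the property that lets pub/sub systems scale out.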

Apache Kafka’s release in 2011 led to a change in enterprise IT architecture to favor continual data streams with real-time analytics. The platform allows developers to deploy distributed architecture in data centers to support code that runs in elastic clusters. Apache Kafka storage is persistent and designed to solve the production issues faced by high traffic websites and mobile applications. Event streaming architecture provides optimal support for the dynamic content requirements of the world’s most popular eCommerce, cloud software, and social networking websites. It is also used in high-performance computing (HPC) research.

Apache Kafka includes five core APIs favored by programmers to build custom software and data center solutions for use at an enterprise scale. Solutions providers like CloudPipes and QuickBase integrate with Kafka-based platforms, like Stream, to connect with thousands of third-party API solutions through SDK tools. Developers can more efficiently support microservice project requirements on event streaming architecture by using internal and external APIs to prepare data for display on dynamic applications that are custom-built for B2B SaaS and IoT requirements.

Historically considered the gold standard, Apache Kafka serves as the foundation for many popular event streaming solutions like Stream, Confluent, and IBM Event Streams. Cloud Service Providers also released a suite of products to build custom data infrastructure in the years following Apache Kafka’s release: Amazon Kinesis in 2013, Azure Event Hubs in 2014, and GCP Dataflow in 2015. Digging into the full suite of tools the Cloud Service Providers offer is outside the scope of this guide, but we did write a neat article on why we built Keen on Apache Kafka rather than Amazon Kinesis.

Event Streaming Architecture: SMB and Enterprise Use-Case Scenarios

Enterprises and SMBs choose to build or buy high-performance distributed systems to address hard problems in custom software development. Common use cases include implementing real-time monitoring, automating network security alerts, and building traffic analytics for websites and mobile apps. Developers leverage Apache Kafka by creating and integrating cross-platform APIs for distributed data, storage, and processing systems.

Event streaming architecture has evolved for use in a wide variety of industry sectors. These are some of the most common uses of Apache Kafka in enterprise & SMB groups:

- Banking and financial sectors rely heavily on Apache Kafka to process payments and record financial transactions for ecommerce, stock exchanges, and credit card gateways.

- Major brands and shipping companies use Apache Kafka for logistics with real-time tracking and analytics across the supply chain with integrated CRM support.

- The largest corporations in the petroleum industry use Apache Kafka to gather and process measurements from remote sensors in oil and gas extraction facilities.

- Apache Kafka is used widely in ecommerce operations for real-time fraud detection with user identity verification and IP address blacklisting for network resource protection.

- Major healthcare providers worldwide use Apache Kafka to support IoT devices which monitor environmental conditions for the treatment of millions of hospital patients.

- Apache Kafka has been adopted widely across the hotel and travel industry for reservation systems where real-time data is used to track transportation and lodging.

- Newer applications of Apache Kafka incorporate advances in artificial intelligence, machine learning, and data science, leading to new software features and products.

- Event streaming architecture provides data analytics in complex business organizations that internal teams can use to build better sales and marketing campaigns.

Event streaming solutions have enabled many innovative software products built on data science and platform analytics. Streaming architecture is now used for product and content recommendations in Digital Experience Platforms (DXPs), supporting customer-centric approaches to eCommerce, social networking, and media publishing.

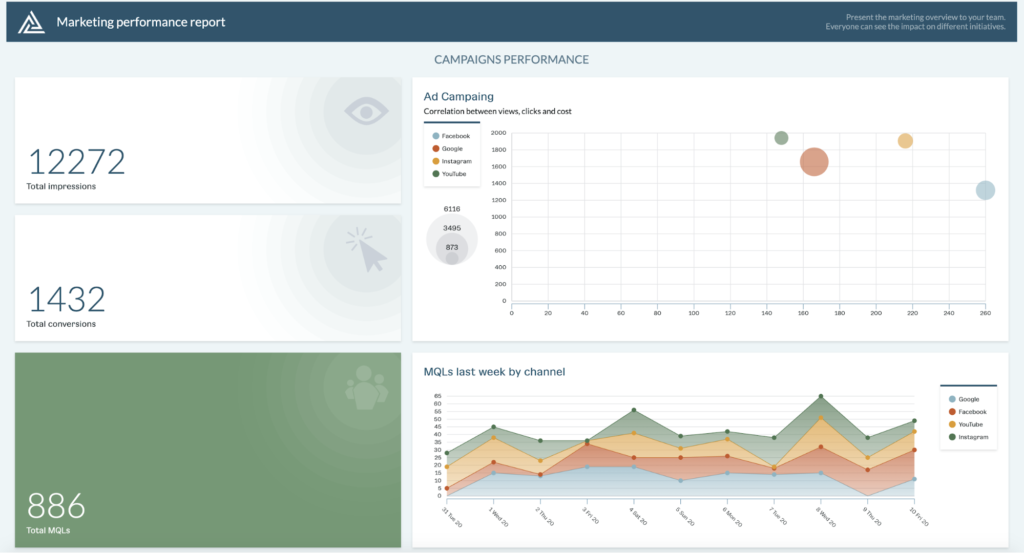

Besides providing these raw streaming capabilities out-of-box, Keen also includes nice-to-have features such as our data analytics tools for fine-grained, real-time analytics on products, services, and content. Developers can save time implementing data analytics by using our Compute API to run queries and our Data Viz library to create embeddable dashboards. This helps Agile software development teams bring new websites and mobile applications to market more quickly and introduce new features to applications in production more regularly.

Implementing an Event Streaming Solution

There are a few common ways a business can approach implementing event streaming architecture:

- Subscribing to a complete event streaming platform with all-in-one functionality including streaming, persistent storage, and real-time analytics.

- Purchasing a managed Kafka solution and extending it by building storage and analytics functionality.

- Building and managing in-house event streaming architecture using open-source or cloud suite software.

We’ll be comparing these solutions in more detail later. First, it’s essential to clearly define a couple of implementation concepts to put sufficient context around these three approaches: managed vs. DIY and multi-tenant vs. dedicated event streaming infrastructure.

Managed vs. Do-It-Yourself (DIY)

Making a choice between managed cloud and DIY event streaming solutions will depend on an audit of IT resources. (Infosec created a handy walkthrough for a comprehensive IT audit to help you get started.) A few high-level implementation questions to consider when evaluating solutions for an event streaming infrastructure are:

- Has your business implemented distributed architecture in various degrees through hybrid and multi-cloud networking under SDN/SDDC standards?

Multi-cloud solutions allow IT departments to build support for a wide range of microservices under a unified corporate security policy and to choose “best-in-class” products from SaaS vendors for productivity. PaaS solutions assist Agile programming teams for custom software development. Managed Apache Kafka platforms operate similarly under the PaaS or IaaS architectural model.

- Do you already have an on-premise data center or web server facilities?

If so, implementing a DIY approach may be the best fit due to the ability to create custom configurations that match your systems. Developers can implement DIY processes to Apache Kafka event streaming architecture in a wide range of public and private cloud hardware methods.

- Do you have the IT resources to manage an event streaming service layer to support apps and services?

IT departments have various degrees of difficulty managing their event streaming service layer in support of apps and services. Many enterprise corporations have already committed to event messaging systems as their data-driven operations’ central nervous system. Digital-native and cloud-native standards help organizations adopt data-driven methodologies across verticals and eliminate data silos. IT teams should determine the ideal solution based on their bandwidth.

After performing an audit of your IT resources, the next step in evaluating managed and DIY event streaming solutions is to set a timeline and allocate a budget for implementing an event streaming solution. Finally, consider any special requirements that are critical to your application such as performance, security, etc.

Managed Cloud solutions are ideal for businesses with smaller IT teams who don’t have the time or resources to deploy and maintain custom event streaming infrastructure. Instead, they can pay for a monthly subscription (subscription plans at Keen start at $149 per month) to have experts deploy, scale, log, and monitor their infrastructure. Managed event streaming platforms also offer APIs and SDKs for streaming and analytics which allow development teams to get up and running in weeks. DIY approaches, on the other hand, can take months or even years to implement, but they also allow for more flexibility in configuration.

Trade-offs – Managed Cloud Event Streaming Architecture:

Benefits:

- Managed cloud services help SMBs adopt Apache Kafka event streaming architecture on an organizational level more quickly, affordably, and efficiently for engineering teams without the risk of building in-house.

- Invest in programming custom software solutions for event stream messaging using Kafka APIs rather than spend to staff and support 24/7 data center operation teams.

- Adopt industry best practices and enterprise security on managed event streaming platforms like Keen with persistent storage and real-time analytics for your data.

Drawbacks:

- For teams with sufficient IT resources, building custom data infrastructure often makes more sense financially rather than paying a recurring cost. Here’s a guide on evaluating purchase price vs. the total cost of ownership.

- It’s worth considering building custom infrastructure to support use cases with exceptionally high event volumes like multiple billions a day and rigorous performance requirements.

Many development teams prefer to adopt a DIY approach to Apache Kafka event streaming architecture because of the need for custom configuration of operations that cannot be accomplished on managed service platforms. DIY solutions for Apache Kafka event streaming can be built on public or private cloud hardware. The use of elastic multi-cluster VMs allows hardware to scale to meet variable traffic demands but must be configured in advance. Additionally, an advantage of the open source development community and the Apache Software Foundation is that programmers from thousands of different companies work together on the platform with a goal towards vendor-agnostic interoperability.

Trade-offs – DIY Apache Kafka Event Streaming Architecture:

Benefits:

- DIY solutions for Apache Kafka event streaming architecture can be managed on public, private, hybrid, or multi-cloud using VMware, OpenStack, and Kubernetes platforms.

- Business organizations can adopt tools like Apache Storm, Spark, Flink, and Beam to build custom AI/ML processing for web/mobile app or IoT integration requirements.

- Build support for high-performance web/mobile applications, IoT networks, enterprise logistics, and industrial manufacturing facilities with embedded real-time data analytics.

Drawbacks:

- DIY solutions carry a higher risk of growing costs and delayed timelines for smaller IT teams due to the complexity of implementing a usable solution and scope creep.

- DIY solutions require dedicated resources for deploying, scaling, logging, and monitoring which is not feasible for smaller IT departments.

Multi-Tenant vs. Dedicated

Apache Kafka can be deployed on both single-tenant and multi-tenant infrastructure. Deciding whether a multi-tenant or dedicated event streaming configuration is right for your organization largely depends on the size of the data processing requirements, the maximum implementation time, and the cost for network hardware resources.

Multi-tenant solutions allow SMBs to level the playing field with enterprise teams and begin building with Apache Kafka event streaming affordably and quickly, using a cloud solution that is pre-configured on remote hardware. Single-tenant architecture, on the other hand, offers complete freedom to configure Apache Kafka network settings related to fiber-optic bandwidth and user permissions in a secure, on-premise solution. However, configuring high-performance compute and bandwidth support in single-tenant or dedicated cluster installations of Apache Kafka can be prohibitively expensive because of the cost of hiring engineers and contractors to deploy and maintain the solution. The implementation process can also be risky for small and inexperienced teams due to the scope of the undertaking.

Main Advantages of Adopting Multi-Tenant Event Streaming Architecture:

- Multi-tenant hardware is the most cost effective way to build support for Apache Kafka, enabling SMBs & startups to compete with enterprise use of real-time data analytics.

- Keen’s event streaming plans start at $149/month. Custom data center configurations are available fully-managed on a contract basis.

- Persistent storage of streaming event data from user devices in Apache Cassandra (or other DBs) enables real-time search with historical analysis at “big data” scale.

- Optimized network speeds and better data security are more attainable with access to the best practices of trained experts in Apache Kafka with years of experience in enterprise data center management.

While multi-tenant Kafka services carry many advantages, there are some trade-offs to consider. Multi-tenant architectures implement per-broker limits on bandwidth and compute processing time for every tenant based on the maximum read/write capacity per broker. Bandwidth in a multi-tenant architecture is worker-dependent, with request quotas determining the maximum throughput. In high-volume systems, this leads to processing queues and caching, and performance can become throttled. Multi-tenant plans limit user bandwidth to preserve latency.
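One common way to enforce a per-tenant bandwidth limit is a token bucket. The sketch below is a generic illustration of that technique, not Kafka's actual quota implementation: the `TenantQuota` class, its parameters, and the numbers are assumptions chosen for the example, and the clock is passed in explicitly so the behavior is easy to follow.

```python
class TenantQuota:
    """Token bucket: a tenant may send `rate` bytes per second, bursting up to `burst` bytes."""

    def __init__(self, rate, burst, now=0.0):
        self.rate = rate
        self.burst = burst
        self.tokens = burst  # start with a full bucket
        self.last = now

    def try_send(self, size, now):
        # Refill tokens for the elapsed time, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if size <= self.tokens:
            self.tokens -= size
            return True   # accepted
        return False      # throttled: the caller must wait or queue the message


quota = TenantQuota(rate=100, burst=200)
results = [
    quota.try_send(150, now=0.0),  # fits in the initial burst
    quota.try_send(150, now=0.0),  # only 50 tokens left, so this one is throttled
    quota.try_send(150, now=1.0),  # 100 tokens refilled after one second
]
```

The throttled second request is where queuing or caching comes in: a tenant that exceeds its quota has to hold messages until enough capacity is available again.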

For growing organizations, a marginal increase in throughput may not be worth the increased risk, cost, and complexity, but for large enterprises with stringent performance requirements, a single-tenant solution is worth considering. The highest throughput and lowest latency can be achieved with a single-tenant solution for event streaming, where bandwidth limits can be raised to allow more data throughput in batch processing via the message queue. This helps high-traffic business organizations avoid the need to cache data when server hardware is operating near its limits. Single-tenant operators need to provision their own hardware resources for multi-cluster elasticity or switch to elastic hybrid solutions.

Main Advantages of Adopting Single-Tenant Event Streaming Architecture:

- Single-tenant hardware can be configured for maximum performance optimization without the bandwidth and compute throttling required on multi-tenant infrastructure.

- Engineers can custom provision hardware at high or low CPU specifications according to requirements, add local AI/ML processing with GPU hardware or custom storage arrays.

- Business owners gain peace of mind from guarded physical access: no one who is not authorized or employed by the company can reach or maintain the hardware.

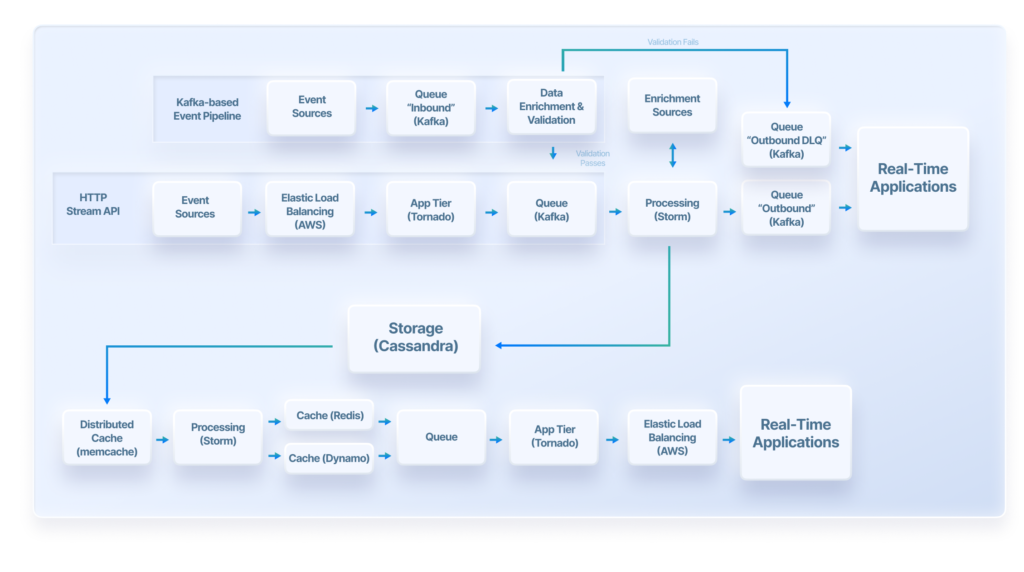

Keen’s platform offers multi-tenant, pre-configured data infrastructure as a service. AWS Elastic Load Balancing, Apache Kafka, and Storm handle billions of incoming post requests. Event data can be streamed to the platform from websites, mobile applications, cloud servers, proprietary data centers, Kafka data pipelines, or anywhere on the web via APIs. Events can be enriched with metadata for real-time semantic processing with AI/ML integration on product/content recommendations or for industrial requirements with IoT. Event data is stored by Keen in an Apache Cassandra database, queried via a REST API, and visualized using Keen’s Data Visualization Library.
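As a rough illustration of what enriching an event with metadata can mean in practice, the sketch below derives extra fields from a raw event before it is stored. This is not Keen's API: the `enrich` function, the `page_url` field, and the `enriched` key are hypothetical names used only for this example.

```python
from urllib.parse import parse_qs, urlparse


def enrich(event, received_at):
    """Return a copy of the event with derived metadata under an 'enriched' key."""
    url = urlparse(event["page_url"])
    enriched = dict(event)  # shallow copy so the raw event is left untouched
    enriched["enriched"] = {
        "received_at": received_at,
        "domain": url.netloc,
        "path": url.path,
        "query": parse_qs(url.query),
    }
    return enriched


raw = {"event": "page_view", "page_url": "https://example.com/pricing?plan=pro"}
result = enrich(raw, received_at="2024-01-01T00:00:00Z")
```

Doing this once at ingestion time means later queries can filter or group on fields such as `domain` or `path` without re-parsing every event.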

Critical Components to an Event Framework: Storage and Analytics

Event streaming architecture is based on pub/sub messaging and event logs, which record state changes on apps or devices via APIs. Complex event streaming architecture has two primary sources of input: streaming data from applications and events generated from batch processing. Applications generate real-time events from activity on web servers, network security monitors, advertising tracking, web/mobile applications, and IoT device sensors. Batch processes generate events from activity such as scheduled billing or other triggered computations. All of this event data is recorded in persistent or cached storage depending on the architecture. Due to the high volume of event messages on popular websites, platforms, and mobile applications, businesses must manage terabytes (TBs) or petabytes (PBs) of data for real-time search and user/network analytics processing at scale.

When evaluating the implementation of an event framework to manage these massive volumes of data, there are two methods of storage to keep in mind: transitory storage and persistent storage.

With transitory storage, data is only available on an event streaming platform for a limited time, whereafter it must be transferred to offsite facilities for warehousing like Azure Blob, Azure Data Lake, AWS S3, or Snowflake. This is an acceptable solution for applications that need to leverage event data in real-time and then perform historical analysis on transferred data at a later date. Challenges arise when a real-time search and data analytics solution is needed due to the division between recent and historical archives.
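The split between recent and historical data can be sketched as a simple retention check. This is a simplified model under stated assumptions: the `split_by_retention` function, the `ts` timestamp field, and the 60-second window are hypothetical, and real platforms apply retention inside the streaming system rather than in application code.

```python
def split_by_retention(events, now, retention_seconds):
    """Return (hot, archived): events inside the retention window stay on the platform."""
    cutoff = now - retention_seconds
    hot = [e for e in events if e["ts"] >= cutoff]
    archived = [e for e in events if e["ts"] < cutoff]
    return hot, archived


events = [
    {"ts": 0, "name": "signup"},
    {"ts": 50, "name": "login"},
    {"ts": 100, "name": "purchase"},
]
hot, archived = split_by_retention(events, now=100, retention_seconds=60)
```

A query that needs the full history of a user now has to read from both places and merge the results, which is the challenge described above.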

With persistent storage, systems keep data on the same platform for reference and access by SQL, APIs, or other query languages like CQL. Persistent storage for event streaming architecture is used to build historical context in real-time data analytics. Event logs provide much of the source information for “big data” archives generated by social networking platforms and ecommerce websites of the world’s largest brands. Event logs in Apache Kafka can be stored for access in a variety of database formats across SQL & NoSQL frameworks.

Simplified example of Kafka storing a log of records (messages) from the first message to present. Consumers fetch data starting from the specified offset in this log of records. (Source)
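The log-and-offset model in the caption above can be sketched with an append-only list. This is a single-process illustration of the concept, not Kafka's storage engine: the `EventLog` class and its method names are assumptions for this example.

```python
class EventLog:
    """Append-only log; a record's offset is its position in the log."""

    def __init__(self):
        self._records = []

    def append(self, record):
        self._records.append(record)
        return len(self._records) - 1  # offset of the record just written

    def read_from(self, offset, max_records=100):
        # Reading never removes records, so any consumer can re-read from any offset.
        return self._records[offset:offset + max_records]


log = EventLog()
for name in ["signup", "login", "purchase", "logout"]:
    log.append(name)

# Two consumers read the same log from different starting offsets.
replay_all = log.read_from(0)
resume_late = log.read_from(2)
```

Because each consumer only needs to remember its own offset, a new consumer can replay history from the beginning while existing consumers continue from where they left off.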

When implementing an event streaming framework, storage frameworks and the use cases they support are critical items to keep in mind. For traditional managed Kafka platforms, like Aiven.io and CloudKarafka, only transitory storage is provided as a part of the platform, and additional software like Apache Cassandra-as-a-Service may be required to achieve persistence. Complete event streaming solutions like Keen differ in that they offer persistent storage as a core component to their event streaming platform. In addition to native storage, real-time analytics are available via our Compute API and embeddable visualizations with our Data Visualization Library. In the next section, we’ll take a deeper dive into additional differences between event streaming solutions.

Which Event Streaming Solution is Right for You

Below are some high-level definitions of different event streaming implementation options:

Complete Event Streaming Platform (SMB and SME)

Keen is the all-in-one event streaming and analytics solution that offers users access to pre-configured big data infrastructure as a service. Data enrichment, persistent storage, real-time analytics, and embeddable data visualizations are included as part of the platform.

Complete Event Streaming Platform (SME and Enterprise)

These are complete, feature-rich event streaming solutions that offer both a multi-tenant as-a-service model and a self-managed software platform that is compatible with dedicated architecture and on-prem implementation. Enterprise event streaming platforms offer a wider array of services, support, and features, helping developers, operations teams, and data architects build enterprise-grade event streaming solutions. Popular solutions include Confluent and Cloudera.

Managed Kafka-as-a-Service

Managed Kafka-as-a-service platforms handle deploying, maintaining, and updating Apache Kafka infrastructure, allowing teams to focus their IT resources on product development. Data infrastructure is configurable via a UI and is compatible with additional solutions for data storage like Apache Cassandra-as-a-Service. Popular solutions include Aiven.io, Instaclustr, and CloudKarafka.

Do-It-Yourself

Custom configured event streaming architecture built from the ground up using open source and cloud-suite tools like Apache Kafka, Amazon Kinesis, GCP Dataflow, Azure Event Hubs, and more.

Event Streaming Options Compared

| Factor | Stream | Managed Kafka | Enterprise | DIY |
|---|---|---|---|---|
| Size of engineering team | Small, less than 5 engineers on data team | Small, less than 10 engineers on data team | Medium to Large, 10-100’s engineers on data team | Medium to Large, 10-100’s engineers on data team |
| Development time | Up and running in days to weeks | Up and running in weeks to months | Up and running in 6 months to 1 year | Up and running in 9 months to 2 years |
| Budget | Starting as low as $149/mo, scales with usage | Starting as low as $200/mo, scales with usage | Starting around $1,000 for standard plan (recommended for production) | Monthly salary of dedicated data engineers (~$60k/month) |
| Performance requirements | Moderate throughput and latency requirements | Moderate throughput and latency requirements | Moderate to high throughput and moderate to low latency requirements | Moderate to high throughput and moderate to low latency requirements |
| Multi-Tenant, Dedicated, and On-Premise | Multi-Tenant | Dedicated | Multi-Tenant, Dedicated, and On-Premise | Multi-Tenant, Dedicated, and On-Premise |
| Fully-Managed Service vs. Self-Managed Software | Fully-Managed Service | Fully-Managed Service | Fully-Managed Service or Self-Managed Software | Self-Managed Software |
| Storage | Transitory and persistent storage | Transitory storage only | Transitory and persistent storage | Dependent on custom configuration |
| Native Analytics and Visualizations | Compute API with SDKs for querying streamed data | Additional open source, cloud suite, or SaaS software required | ksqlDB available as part of the Confluent platform for analytics and building event streaming applications | Additional open source, cloud suite, or SaaS software required |
| Data Enrichments | Available as part of the platform | Additional software required | Available as part of the platform via ksqlDB | Additional software required |

Conclusion

B2B SaaS and IoT companies need usable event streaming and analytics infrastructure to build differentiated, event-driven products. This big data infrastructure serves as the foundation for offering a wide array of functionality including real-time monitoring and alerting, building event-driven product experiences, and offering reactive user experiences.

In this guide, we covered:

- An overview of event streaming technologies

- Common use cases for SMBs and enterprises

- Functional differences in the implementation of event streaming solutions and their trade-offs

- Considerations for storage and analytics in an event streaming solution

- Software solutions to consider for Complete Event Streaming Solution, Managed Kafka, and DIY

As a general rule of thumb, a Complete Event Streaming Platform (SMB) like Keen or a Managed Kafka-as-a-Service solution is the better option for small B2B SaaS and IoT businesses (fewer than 100 employees and less than $50 million in yearly revenue as defined by Gartner). As your business’s employee count and yearly revenue grow, turning to a Complete Event Streaming Platform (Enterprise) or building an in-house solution is often the better option.

Are you a small or midsize business interested in trying Keen’s all-in-one event streaming and analytics platform? Get started today by signing up for a free 30-day trial, no credit card required.