Modern businesses use event streaming architecture to capture and process massive volumes of data in real time. To quantify "massive": data is currently being generated at an unprecedented rate of over 2,500,000,000,000,000,000 bytes (2.5 quintillion) a day. By 2025, the amount of data in the world is expected to double every 12 hours. Companies with a strategy to harness big data, and that use it to inform their business and power differentiated, event-driven products, are coming out on top.

There are a number of implementation factors to consider when evaluating event streaming solutions: multi-tenant vs. dedicated infrastructure, fully managed vs. do-it-yourself, and the requirements around storage and analytics. Understanding the trade-offs among these options is crucial to selecting the best solution. In this guide, we take a deeper look at multi-tenant vs. dedicated event streaming infrastructure, with the goal of helping you determine which option is right for you. We cover more implementation considerations in our complete guide, Event Streaming and Analytics: Everything a Dev Needs to Know.

Event Streaming Architecture: The Rise of Apache Kafka for SMBs

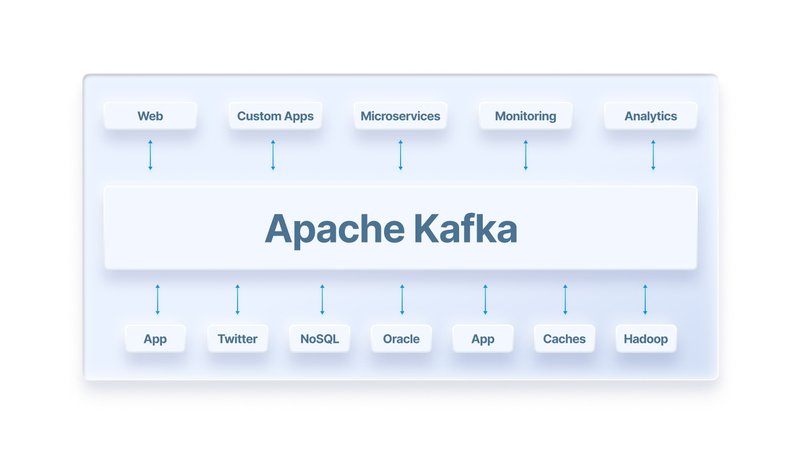

Event streaming architecture for real-time data capture and analytics emerged roughly a decade ago, bolstered by the release of Apache Kafka, an open-source distributed event streaming platform, in 2011. Apache Kafka serves as a foundational component of the data infrastructure of 80% of Fortune 100 companies. It offers a robust solution to support the high-traffic workloads of enterprise websites and mobile applications as well as their high-performance computing (HPC) requirements.

Keen Industry Survey (2017) – Hyper-Scale Data Centers & Apache Kafka:

- Netflix deployed Kafka in production at a scale of 500 billion events per day, with hundreds of data engineers, programmers, and security analysts on their support team.

- Facebook used Kafka to power Presto with Apache Hive, HBase, Scribe, and other tools to perform analytics on over one billion active users and 300+ petabytes in stored data.

- Airbnb Engineering adopted Kafka to support real-time analytics for 100 million users. Their team emphasized the importance of open source solutions and community.

- Pinterest used Apache Kafka streaming architecture to process over 10 billion page views per month using Storm, Hadoop, HBase, Redshift, & other “big data” tools.

Apache Kafka evolved out of enterprise requirements for ETL (“Extract, Transform, & Load”) runtime performance and message broker queues in cloud batch processing. ETL tools are widely used to connect industry databases and data warehouse storage facilities with custom search functionality. Batch processing requires companies to move large amounts of data at high bandwidth in cloud runtimes or with on-prem control. Messaging applications track I/O connections in the cloud to show what is happening in network traffic and platform activity in real time. As real-time and scale-related requirements evolved, Apache Kafka filled the need of programmers and DevOps engineers to build custom software apps on event streaming solutions that combine ETL and messaging functionality in a unified “big data” platform.

Fast-forwarding to today, Apache Kafka isn’t a tool exclusively leveraged by enterprises. It also offers small-to-midsize businesses (SMBs) better messaging, faster ETL, and scalable streaming data pipelines when compared to traditional request-response software. Data-driven organizations adopt Kafka architecture to build the foundation for event stream processing that enables the addition of real-time analytics capabilities in tandem with traditional historical analysis. Some examples of the types of streaming events tracked by Kafka are database changes, customer interactions, SaaS data, and microservice processes.

In 2021, SMBs are choosing Apache Kafka solutions to modernize legacy applications and databases, moving them from mainframe hardware to the public cloud with support for microservices. With the benefits of horizontal scalability, process decoupling, and support for real-time processes, Kafka serves as a tool for upgrading both the applications themselves and the engineering processes around them. Modern applications leverage stream processing for a wide array of use cases, including creating reactive, personalized user experiences, deploying real-time analytics into client-facing applications, monitoring user and application performance, and more.

Comparison of Event-Driven Applications to Traditional Software:

- Traditional request-response software applications with monolithic stack requirements are deterministic with rigid requirements for coupling of the codebase and hardware.

- Event-driven applications are responsive, flexible, and extensible with isolated runtime support for microservices. They support loosely-coupled requirements for web servers.

- Event-driven applications run with cloud-native optimization for virtualization (VMs), distributed storage (AWS S3/Azure Blob), and containers (Docker/Kubernetes).
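
The loose coupling described in the comparison above can be illustrated with a toy, in-process publish/subscribe bus. This is only a sketch: `EventBus`, the `page_view` topic, and the handlers are hypothetical names invented for illustration, and the bus is a stand-in for a broker topic, not a Kafka client.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Event = dict
Handler = Callable[[Event], None]


class EventBus:
    """Toy in-process stand-in for a broker topic (hypothetical, not Kafka)."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        # Consumers register interest in a topic; the producer never sees them.
        self._handlers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> int:
        """Deliver the event to every subscriber and return how many were notified."""
        handlers = self._handlers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


bus = EventBus()
analytics_log: List[Event] = []
recommendations: List[str] = []

# Two independent consumers react to the same event stream.
bus.subscribe("page_view", analytics_log.append)
bus.subscribe("page_view", lambda e: recommendations.append(f"similar-to:{e['page']}"))

delivered = bus.publish("page_view", {"user": "u1", "page": "/pricing"})
```

The producer only knows the topic name, so a new consumer can be added without touching the code that publishes events, which is the property the bullets above call loosely coupled.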

Multi-Tenant Event Streaming Architecture

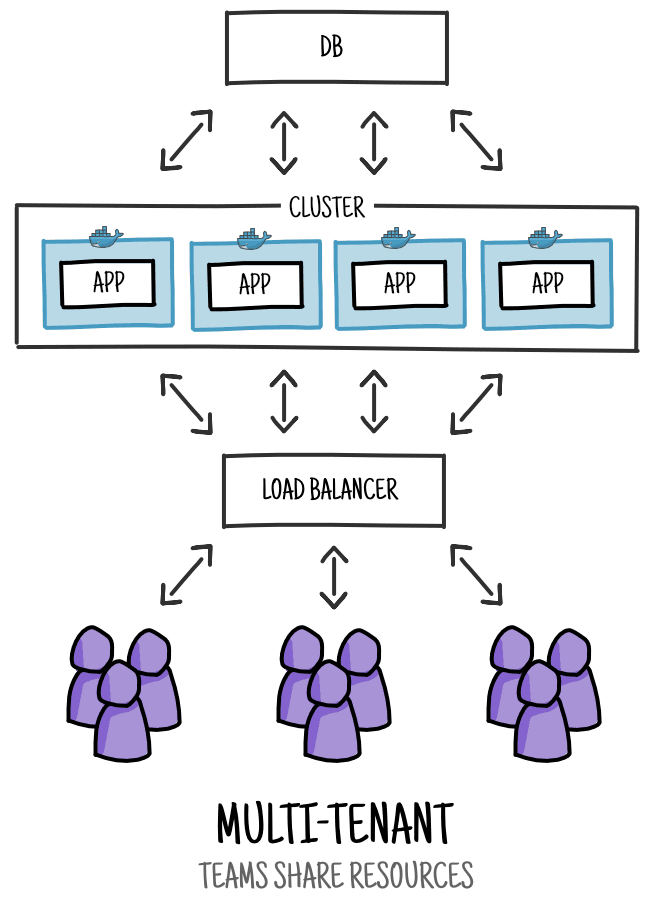

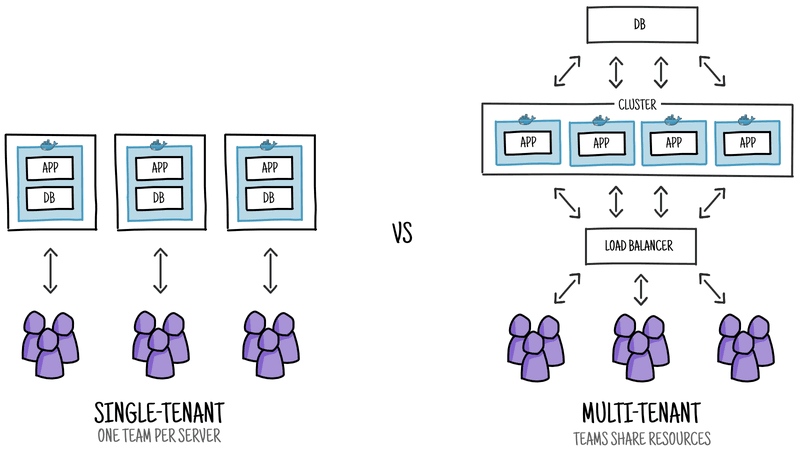

Apache Kafka can be deployed on both single-tenant and multi-tenant infrastructure. Deciding which event streaming configuration, multi-tenant or dedicated, is right for your organization largely depends on your scale and throughput requirements for data processing, the maximum implementation time available, and your budget for network hardware resources.

Multi-tenant architectures, as defined by Cloudflare, enable multiple customers of a cloud vendor to share the same computing resources. Despite sharing resources, each customer’s data is kept completely separate, and the user experience feels independent of other tenants. This public cloud model has allowed SaaS, PaaS, and IaaS companies to thrive by offering more affordable and accessible solutions.

Companies follow the public cloud model for event streaming architecture by running dedicated Apache Kafka clusters on AWS, GCP, or Microsoft Azure hardware using “zero trust” security methods with TLS encryption on I/O connections and AES encryption on storage files. The managed cloud approach to multi-tenancy is especially beneficial for small-to-midsize businesses (SMBs) to save even more on data center hardware costs by sharing resources under established compute, storage, and bandwidth limits.

Small companies, lean startups, and consultancy firms with bootstrapped engineering teams often can’t afford the high cost or financial risk associated with developing and managing these technologies in-house. Even once they have been developed and deployed into production environments, the nature of streaming technologies implies ongoing maintenance, unexpected downtimes and incident events, along with periodic upgrades, additions, and updates. Contractors and integrators need a managed Apache Kafka solution to get up and running with programming teams to meet client contract requirements quickly, and without such inhibitory operational dependencies.

Managed cloud platforms are designed to ease the uptake burden for SMBs when implementing Apache Kafka services for real-time analytics and powering event-driven applications. Keen provides programming teams direct access to pre-configured event streaming infrastructure, which includes data enrichment, pre-processing, and persistent storage, along with real-time analytics and visualizations. Developers can also read from Keen’s Kafka clusters to build applications with event-driven architectures, extend their pipeline with custom real-time processing, and route events to downstream destinations.

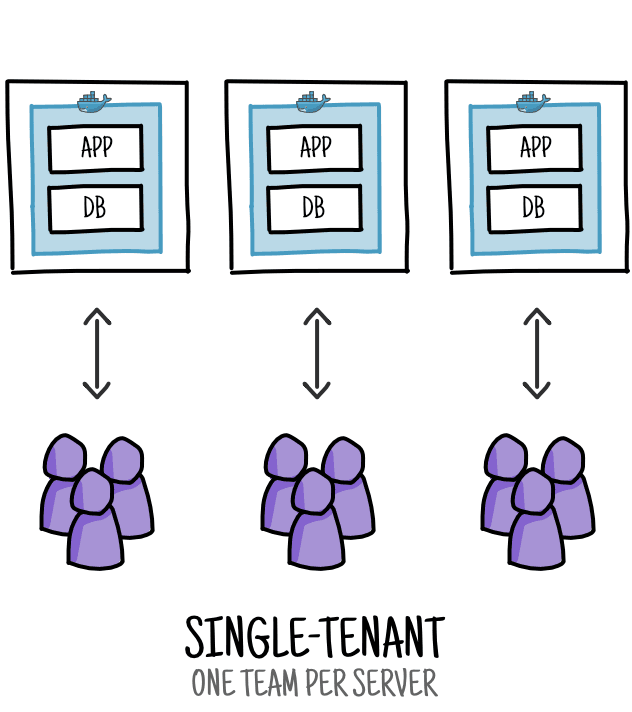

Single-Tenant Event Streaming Architecture

Single-tenant or dedicated event streaming architecture is configured in such a way that computing resources are reserved for only one business. This can be accomplished in a variety of ways such as private cloud, virtual private cloud, and on-premise. This model is often favored by enterprise organizations that have stringent throughput, security, and general performance requirements that justify the usually-prohibitive associated costs.

Reports from enterprise groups suggest that IT departments spend an average of around 2 years preparing to migrate to Apache Kafka, including initial staffing, facility engineering, purchasing, and new software application development. Enterprise data centers implement Apache Kafka in a wide variety of situations to support media publishing, ecommerce, industrial manufacturing, consumer analytics, IoT networks, etc. Enterprise groups operate Kafka clusters in public, private, hybrid, and multi-cloud architecture. Not all installations are single-tenant.

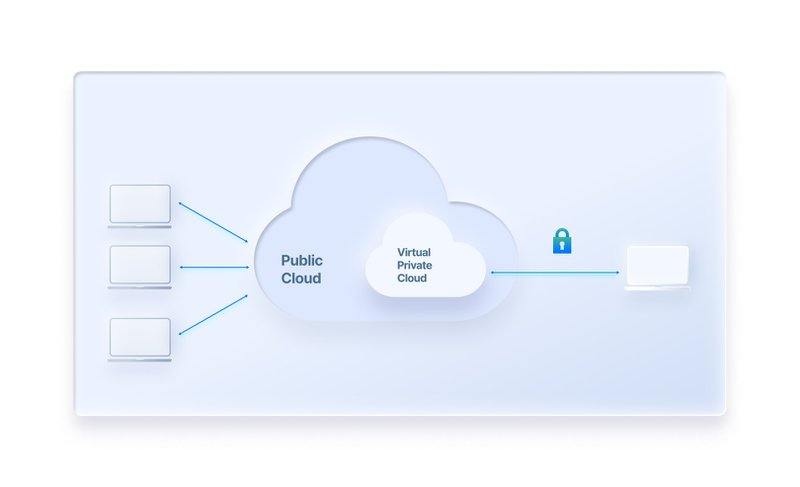

Single-tenant architecture is increasingly moving towards Virtual Private Cloud (VPC) networking as enterprise companies adopt SDDC standards. With VPC deployments, businesses install Apache Kafka event streaming architecture on dedicated multi-cluster hardware configurations. Overcapacity must be planned in advance and pre-provisioned. Otherwise, traffic spikes can be managed on-demand by elastic public cloud solutions but at a higher cost for resources. There are many private cloud solutions for dedicated hardware.
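
As a rough illustration of planning overcapacity in advance, the sketch below estimates how many brokers a dedicated cluster would need to absorb peak write traffic. The function name, the headroom default, and all of the figures are assumptions made for the example; real sizing also depends on disk, network, partition count, and consumer load.

```python
import math


def brokers_needed(peak_mb_per_s: float,
                   replication_factor: int,
                   broker_capacity_mb_per_s: float,
                   headroom: float = 0.3) -> int:
    """Estimate brokers required for peak writes, keeping spare capacity.

    headroom is the fraction of each broker's capacity held in reserve
    for traffic spikes (an assumed planning parameter).
    """
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in [0, 1)")
    # Every produced byte is written once per replica across the cluster.
    cluster_write_load = peak_mb_per_s * replication_factor
    usable_per_broker = broker_capacity_mb_per_s * (1 - headroom)
    by_throughput = math.ceil(cluster_write_load / usable_per_broker)
    # A cluster needs at least as many brokers as replicas.
    return max(replication_factor, by_throughput)


# Hypothetical inputs: 200 MB/s peak, 3 replicas, 100 MB/s per broker.
estimate = brokers_needed(200, 3, 100, headroom=0.3)
```

With these placeholder inputs the cluster carries 600 MB/s of replicated writes against 70 MB/s of usable capacity per broker, so the estimate rounds up to 9 brokers.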

Dedicated Infrastructure – Enterprise Data Centers, Colocation, & Private Cloud:

- On-premise data center facilities operated by the world’s largest banks, manufacturing companies, media publishers, education groups, utilities, and government institutions.

- Colocation center operations where fully-owned hardware is deployed to multiple data center locations worldwide for edge server, CDN, or data sovereignty requirements.

- Infrastructure-as-a-Service (IaaS) platforms that dedicate specific hardware for access by clients within secure data center facilities guarded by biometric entry precautions.

- Dedicated web servers offered by cloud hosting services where the rackmount unit will be wholly leased for use by a single customer under self-managed or BYOL plans.

- Private cloud hardware networked with virtualization under SDDC standards which is fully owned, operated and managed by an organization with local autonomy.

Many organizations will not accept any model other than an on-premise data center or private cloud as a secure facility because they require control over physical access to, and remote management of, the hardware. Other groups treat dedicated hardware leased from IaaS providers or cloud hosting companies as an equivalent, maintaining single tenancy on specific equipment in a managed facility operated by the remote service contractor. Colocation is a hybrid scenario that combines elements of single and multi-tenancy depending on the facility.

Comparison of Multi-Tenant vs. Single-Tenant Architecture

Multi-tenant solutions allow SMBs to level the playing field with enterprise teams and begin building with Apache Kafka event streaming both affordably and quickly, using a cloud solution that is pre-configured on remote hardware. Single-tenant architecture, on the other hand, offers complete freedom to configure Apache Kafka network settings related to fiber-optic bandwidth and user permissions within a secure, on-premise solution. However, configuring high-performance compute and bandwidth support in single-tenant or dedicated cluster installations of Apache Kafka can be prohibitively expensive, because engineers and contractors must be hired to deploy and maintain the solution. The implementation process can also be risky for small and inexperienced teams due to the scope of the undertaking, along with concerns around migrations, SLAs, incidents, and updates.

Main Advantages of Adopting Multi-Tenant Event Streaming Architecture:

- Multi-tenant hardware is the most cost-effective way to build support for Apache Kafka, enabling SMBs & startups to compete with enterprise use of real-time data analytics.

- Keen’s event streaming plans start at $149/month. Custom data center configurations are available fully-managed on a contract basis.

- Persistent storage of streaming event data from user devices in Apache Cassandra (or other DBs) enables real-time search with historical analysis at ‘big data’ scale.

- Access the best practices of trained experts in Apache Kafka with years of experience in enterprise data center management for optimized network speeds & better data security.

While multi-tenant Kafka services carry many advantages, there are some trade-offs to consider. Multi-tenant architectures implement per-broker limits on bandwidth and compute processing time for every tenant, based on the maximum read/write capacity per broker. Bandwidth in a multi-tenant architecture is worker-dependent, with request quotas determining the maximum throughput. In high-volume systems this leads to processing queues and caching, and performance can become throttled. Multi-tenant plans limit each user’s bandwidth to keep latency low for all tenants.
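
To make the throttling trade-off concrete, here is a simplified model of a per-tenant byte-rate quota. It is a sketch under stated assumptions rather than any vendor's actual implementation: the function name and the numbers are hypothetical, and the delay is derived from the requirement that the tenant's average rate over the window plus the delay falls back to the quota.

```python
def throttle_delay_s(observed_bytes: int,
                     window_s: float,
                     quota_bytes_per_s: float) -> float:
    """Seconds a tenant must wait so its average rate drops back to the quota.

    Solves observed_bytes / (window_s + delay) == quota_bytes_per_s for delay.
    Returns 0.0 when the tenant is already within its quota.
    """
    observed_rate = observed_bytes / window_s
    if observed_rate <= quota_bytes_per_s:
        return 0.0
    return observed_bytes / quota_bytes_per_s - window_s


# Hypothetical tenant: 15 MB sent in a 10 s window against a 1 MB/s quota.
delay = throttle_delay_s(15_000_000, 10.0, 1_000_000.0)

# A tenant sending 5 MB in the same window is under quota and is not delayed.
no_delay = throttle_delay_s(5_000_000, 10.0, 1_000_000.0)
```

In this model the over-quota tenant is held back for 5 seconds, which is the kind of queuing the paragraph above describes: the shared broker stays responsive for other tenants at the cost of the heavy tenant's throughput.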

For growing organizations, a marginal increase in throughput is rarely worth the increased risk, cost, and complexity, but for large enterprises with stringent performance requirements, a single-tenant solution is worth considering. The highest throughput and lowest latency can be achieved by adopting a single-tenant solution for event streaming where the bandwidth speeds can be elevated to provide for more data throughput in batch processing via the message queue. This helps high traffic business organizations avoid the need to cache data when server hardware is operating near limits. Single-tenant operators need to provision their own hardware resources for multi-cluster elasticity or switch to elastic hybrid solutions.

Main Advantages of Adopting Single-Tenant Event Streaming Architecture:

- Single-tenant hardware can be configured for maximum performance optimization without the bandwidth and compute throttling required on multi-tenant infrastructure.

- Engineers can custom provision hardware at high or low CPU specifications according to requirements, add local AI/ML processing with GPU hardware, or custom storage arrays.

- Business owners have the peace of mind of guarded physical access: no one who is not authorized or employed by the company can reach or maintain the hardware.

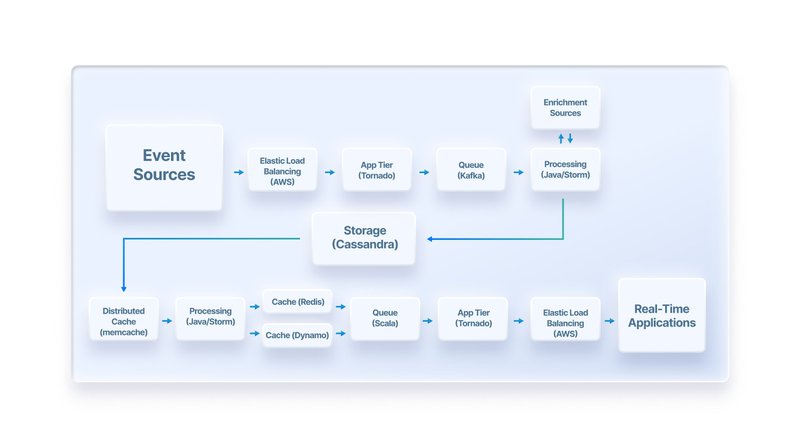

Keen’s platform offers multi-tenant, pre-configured data infrastructure as a service. AWS Elastic Load Balancing, Apache Kafka, and Storm handle billions of incoming POST requests. Event data can be streamed to the platform from websites, mobile applications, cloud servers, proprietary data centers, Kafka data pipelines, or anywhere on the web via APIs. Events can be enriched with metadata for real-time semantic processing with AI/ML integration on product/content recommendations or for industrial requirements with IoT. Event data is stored by Keen in an Apache Cassandra database queried via a REST API and visualized using Keen’s Data Visualization Library.
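
The idea of enriching an event with metadata before it is stored can be sketched in a few lines. The field names (`page_url`, `enriched`, `received_at`, `domain`, `path`) and the `enrich` function are hypothetical and chosen only for illustration; they are not Keen's schema or API.

```python
from datetime import datetime, timezone
from urllib.parse import urlparse


def enrich(event: dict, received_at: datetime) -> dict:
    """Return a copy of the event with derived metadata under 'enriched'."""
    parsed = urlparse(event.get("page_url", ""))
    enriched = dict(event)  # leave the caller's event untouched
    enriched["enriched"] = {
        "received_at": received_at.isoformat(),  # server-side arrival time
        "domain": parsed.netloc,                 # derived from the raw URL
        "path": parsed.path,
    }
    return enriched


raw_event = {"user": "u1", "page_url": "https://example.com/pricing?ref=ad"}
result = enrich(raw_event, datetime(2021, 1, 1, tzinfo=timezone.utc))
```

Deriving fields once at ingestion time means downstream queries can filter on `domain` or `path` directly instead of re-parsing the raw URL on every read.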

Next steps: How to Choose an Event Streaming Solution?

There are a few common options to implement event streaming architectures powered by Kafka as defined below. Determining whether your organization needs multi-tenant or dedicated event streaming infrastructure is a crucial factor in narrowing down the best option for you. Additionally, we recommend evaluating whether a managed service or self-managed option is right for you. It is also essential to consider the storage and analytics needs of your event framework. For a handy comparison table, check out our complete guide Event Streaming and Analytics: Everything a Dev Needs to Know.

Buying a complete event streaming platform

Platforms like Keen offer all-in-one functionality, including streaming, persistent storage, and real-time analytics. Pre-configured data infrastructure is accessible via an API, allowing developers to get up and running fast.

Purchasing a managed Kafka solution

This option means using a platform like Aiven.io, Instaclustr, or CloudKarafka and extending it by building storage and analytics functionality. Other tools, like Apache Cassandra-as-a-Service, are required in order to achieve persistent storage and data analytics capabilities.

Building and managing event streaming architecture in-house

Developers can use open-source software like Apache Kafka or cloud suite software like Amazon Kinesis to build custom event streaming architecture. Event streaming architecture can be custom configured to meet rigorous requirements but requires development and maintenance resources.

Keen: Fully Managed Event Streaming Platform for the SMB

SMBs have the option to build event streaming architecture solutions for their IT operations internally using solutions like GCP Dataflow, AWS Kinesis, Microsoft Azure, or IBM Event Streams. Alternatively, they can consider using a managed cloud platform with pre-built big data infrastructure, like Stream, to make it easier to adopt event streaming architecture on an organizational level, as there is no need to worry about maintaining data center hardware. Managed cloud solutions for event streaming architecture lower the overall costs to deploy, scale, centrally log, and continually monitor resources, with the trade-off of recurring costs due to the subscription model. This solution makes particular sense for SMBs that have limited DevOps and engineering resources.
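
The subscription trade-off comes down to simple arithmetic, sketched below. Only the $149/month figure comes from this article; the upfront build cost, the in-house running cost, and the larger contract price are placeholder values invented for the example and should be replaced with your own estimates.

```python
import math
from typing import Optional


def months_to_break_even(upfront_cost: float,
                         in_house_monthly: float,
                         managed_monthly: float) -> Optional[int]:
    """Months until an in-house build becomes cheaper than a subscription.

    Returns None when the in-house option never catches up, i.e. its
    monthly running cost is at least the managed subscription price.
    """
    monthly_savings = managed_monthly - in_house_monthly
    if monthly_savings <= 0:
        return None
    return math.ceil(upfront_cost / monthly_savings)


# Placeholder in-house figures: $50,000 to build, $2,000/month to run.
small_team = months_to_break_even(50_000, 2_000, 149)
# Placeholder custom managed contract at $10,000/month.
large_contract = months_to_break_even(50_000, 2_000, 10_000)
```

Under these assumed numbers the in-house build never pays for itself against the entry-level plan, while against a large custom contract it breaks even after 7 months, which matches the article's point that the managed model fits teams with limited engineering resources.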

Keen’s event streaming and analytics platform is based on Apache Kafka with a Cassandra (NoSQL) database for persistent storage of event data. Programming teams can use Keen as a cloud resource for distributed hardware when building event-driven products and applications with real-time analytics. Keen offers a Stream API with SDKs, Kafka connectors, HTML snippets, and inbound webhooks to help developers seamlessly implement real-time, enriched event streams for their product or application. Applications can consume this event data directly from these streams in Stream. It is also possible to further integrate with ksqlDB, Materialize, and other API services to build custom, event-driven applications. In addition to the streaming functionality, Keen offers unlimited storage, real-time analytics, and embeddable visualizations for streamed data.

Compared to enterprise streaming platforms like Cloudera and Confluent, Keen is more affordable, has lower implementation overhead, and offers a faster time-to-market for Agile programming teams using our SDKs. When compared to managed Kafka solutions such as Aiven.io or CloudKarafka, Keen offers the notable advantage of a complete event streaming platform with data enrichment, persistent storage, and usable analytics included, so there is no need to build additional solutions on top of your managed Kafka infrastructure.

Interested in trying Keen’s all-in-one event streaming and analytics platform free for 30 days? Get started today by signing up for a free trial, no credit card required.