The widespread adoption of Apache Kafka event streaming architecture over the last decade, in both enterprise corporations and small-to-midsize businesses (SMBs), has enabled the increased use of data science and machine learning technologies. Businesses spanning a wide array of use cases, such as web publishing, social networking, industrial manufacturing, ecommerce, and many other B2B SaaS and IoT applications, have all benefited significantly from adopting technology that lets them capture the massive volumes of data they generate and act on it in real time.

As streaming data infrastructure became ubiquitous across data-driven organizations, another trend emerged as well: event-driven applications. Event-driven applications are distinguished by the ability to deliver reactive, customized product experiences based on real-time event data. Common examples include personalization of content and displays, curated user experiences on cloud platforms, and flexible billing models based on custom, multi-dimensional metrics, like Events-Based billing.

With the rise of event-driven architectures, developers are more frequently facing the challenge of converting their existing legacy monolithic applications, along with deciding whether to develop a new product or application using an event-based framework. In this guide, we will:

- Discuss how event-driven architecture compares to monolithic frameworks

- Review the advantages of distributed hardware for horizontal scaling using API requests

- Highlight prominent use cases of modern event-driven architectures

- Cover the key considerations when planning to implement an event-driven application

Monolithic vs. Distributed Architecture: Microservices

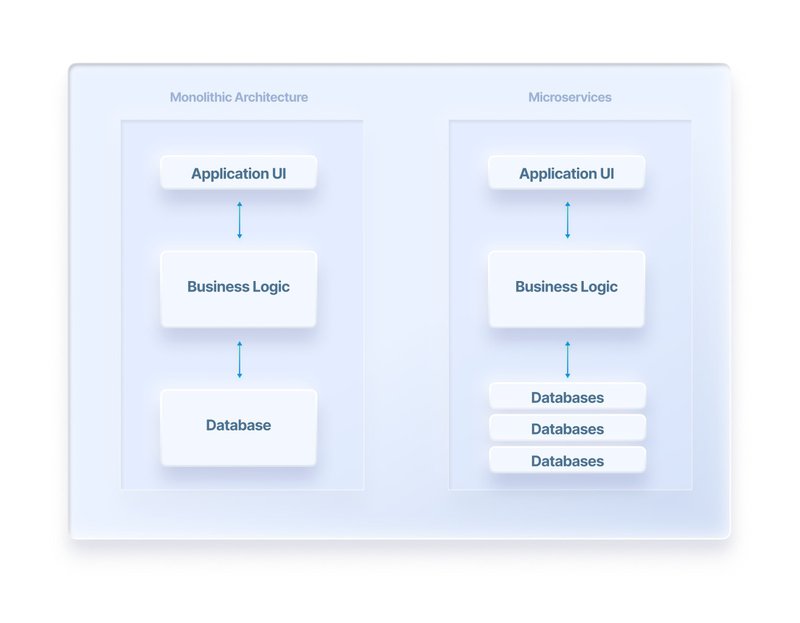

Monolithic architecture is designed on the model of a traditional mainframe computer or dedicated web server hardware with a vertical software stack that connects processing, RAM, database, I/O transfers, email, and file storage. For the deployment of software applications, monolithic architecture requires a tightly coupled runtime environment combining the BIOS, operating system, database framework, and programming language extension support. With monolithic architecture, the hardware configuration represents the limits of the software. There is no support for elastic scalability of hardware units, so the unit will fail if top capacity is exceeded.

Over the last 20 years, monolithic architecture has been gradually displaced by distributed architecture operating on the fundamentals of a Software-Defined Data Center (SDDC). This migration was made possible by major advancements in virtualization and Software-Defined Networking (SDN). Distributed architecture is based on virtual machines (VMs) and containers on servers that permit the sharing of resources in secure, isolated multi-tenant environments within hyperscale data center facilities. A key advantage of distributed architectures is that they support extensive use of APIs in processing data to reduce the strain on monolithic hardware through a horizontal stack approach. Additional benefits include loose coupling to underlying hardware through microservice support, fault tolerance, real-time data analytics, improved agility, and compliance automation. Despite the overall migration away from monolithic architecture, it is still commonly used across all sectors of industry for specific cases like software application runtime support and batch processing.

Differences Between Monolithic & Microservice Architecture:

- Microservices represent a modular or object-oriented approach to distributed architecture where VMs and containers are used to isolate code in different runtimes for parallel processing that is interconnected via SDN and APIs in custom software support.

- The breakdown of monolithic architecture into microservices allows for faster processing and greater scalability of hardware for high-traffic support requirements.

- The use of distributed architecture with microservices requires load balancing on network traffic, including container orchestration and multi-cloud resource management, adding multiple layers of complexity to operations vs. monolithic hardware stacks.

- Monolithic applications can be easier to develop, launch, and deploy, and they offer greater runtime autonomy than systems based on microservices, which typically carry variable usage costs.

For the deployment of software, data center engineers need to manage specific components for user authorization, front-end design, business logic, database support, APIs, code processing, and email/SMS communication. The model of the traditional Apache web server has all of these elements combined in a vertical stack that is installed in layers with the software runtimes for web/mobile endpoint devices at the top. On the other hand, distributed systems utilize remote hardware that is disconnected from the code processing and database configured using SDN and APIs for application runtime support. This allows distributed systems to solve the problems of hardware failure due to peak overload by introducing elasticity to scale through synchronized VMs or containers in automated cloud multi-clusters. This can be further optimized by Infrastructure-as-code (IaC).
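To make the contrast between a fixed-capacity server and elastic scaling concrete, here is a minimal Python sketch. The function names, the capacity figures, and the replica limits are all illustrative assumptions and are not taken from any particular cloud provider or orchestrator.

```python
import math


def monolith_can_serve(requests_per_sec: float, capacity: float) -> bool:
    """A single fixed-size server either fits the load or it does not."""
    return requests_per_sec <= capacity


def replicas_needed(
    requests_per_sec: float,
    capacity_per_replica: float,
    min_replicas: int = 1,
    max_replicas: int = 20,
) -> int:
    """Return how many identical VMs or containers are needed for the load.

    The result is clamped between min_replicas and max_replicas, which are
    hypothetical limits an operator would configure.
    """
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    wanted = math.ceil(requests_per_sec / capacity_per_replica)
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    peak_load = 950.0  # requests per second, made-up figure
    print(monolith_can_serve(peak_load, capacity=200.0))
    print(replicas_needed(peak_load, capacity_per_replica=200.0))
```

With these made-up numbers the single server is over capacity at peak, while the distributed deployment simply asks for more replicas. Real autoscalers add smoothing and cooldown periods that this sketch leaves out.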

Microservices are a critical type of distributed system, characterized by componentization into units that are independently replaceable and upgradable. Fowler (2014) described the difference between the use of libraries in monolithic architecture and services through APIs in distributed architecture. Microservices are organized around business requirements, with smart endpoints and dumb pipes, decentralized data management, and infrastructure automation. Monolithic architecture, by contrast, guarantees ACID transactions through a relational database; in a microservices architecture this guarantee is replaced by APIs over what NGINX calls “a partitioned, polyglot‑persistent architecture for data storage” with both SQL & NoSQL elements. Microservices have been crucial to enabling the development of event-driven applications. Event-driven applications, as the name implies, respond in real time to a data-rich event or state change. Common examples include real-time user feedback, customization, and personalization of content, as well as sales analytics, network traffic metrics, and online payment processing.

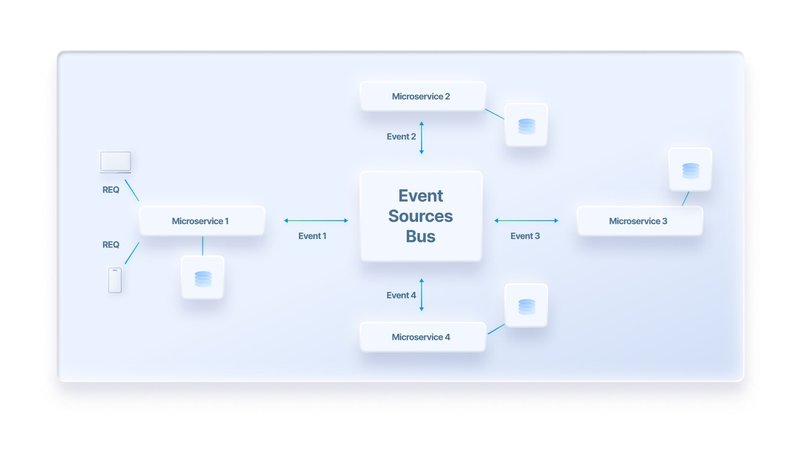

Event-Driven Architecture: Build Horizontal Scalability

Event-driven architecture is based on pub-sub messaging systems that communicate state changes from an application via an API. Each event is routed to different microservices for processing on distributed hardware rather than using monolithic construction methods of vertical stack deployment. This allows for software development teams to build complex functionality such as software systems automation and real-time analytics for websites, mobile applications, intranet, and IoT with events as triggers. After receiving an event trigger, APIs return the information from distributed hardware back to the app via other microservices. Additionally, event buses are used to connect SaaS processing for applications in runtime support with rules and filters, and event topics are used to create message queues in high-performance requirements at scale where millions of events per second may be generated by the traffic of a website or mobile application.
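The topic, rule, and filter ideas described above can be sketched with a small in-process event bus. This is only an illustration of the pub-sub pattern in plain Python: the `EventBus` class, its method names, and the `page_view` topic are hypothetical, and a production system would use a networked broker rather than a dictionary in memory.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List, Optional, Tuple

Event = Dict[str, Any]
Handler = Callable[[Event], None]
Rule = Callable[[Event], bool]


class EventBus:
    """Toy pub-sub bus: publishers and subscribers only share a topic name."""

    def __init__(self) -> None:
        self._subs: Dict[str, List[Tuple[Handler, Optional[Rule]]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler, rule: Optional[Rule] = None) -> None:
        """Register a handler; an optional rule filters which events it sees."""
        self._subs[topic].append((handler, rule))

    def publish(self, topic: str, event: Event) -> int:
        """Deliver the event to every matching subscriber; return the count."""
        delivered = 0
        for handler, rule in self._subs.get(topic, []):
            if rule is None or rule(event):
                handler(event)
                delivered += 1
        return delivered


if __name__ == "__main__":
    bus = EventBus()
    all_views: List[Event] = []
    mobile_views: List[Event] = []

    # One subscriber takes everything, the other only mobile traffic.
    bus.subscribe("page_view", all_views.append)
    bus.subscribe("page_view", mobile_views.append,
                  rule=lambda e: e.get("device") == "mobile")

    bus.publish("page_view", {"url": "/pricing", "device": "desktop"})
    bus.publish("page_view", {"url": "/docs", "device": "mobile"})
    print(len(all_views), len(mobile_views))
```

The publisher never references a subscriber directly, which is the loose coupling the architecture depends on: new consumers can be added without touching the code that emits events.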

Take eCommerce as an example application. State changes or actions are communicated by events across distinct services such as checkout, shipping, logistics, and customer communication. Then, as these downstream services consume events, they execute their relevant function in order to orchestrate the underlying yet distinct processes. For example, a single purchase event could trigger a credit card charge, initialize the shipping process, add points to a rewards program, send a personalized email with a discount, and update a real-time model on consumer behavior and product segmentation. Such an architecture allows product and engineering teams to bring products and improvements to market faster without being crippled by a web of dependencies, along with accelerating their ability to identify and resolve bottlenecks.
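As a rough sketch of the purchase example, the Python below fans a single event out to several independent consumers. All function names, event fields, and the one-point-per-dollar rewards rule are invented for illustration. In a real deployment each consumer would read from the event stream in its own process; the loop here only stands in for that.

```python
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]
Consumer = Callable[[Event], str]


def charge_card(event: Event) -> str:
    return f"charged {event['amount_cents']} cents for order {event['order_id']}"


def start_shipping(event: Event) -> str:
    return f"shipping order {event['order_id']} to {event['postcode']}"


def add_reward_points(event: Event) -> str:
    points = event["amount_cents"] // 100  # hypothetical rule: 1 point per whole dollar
    return f"added {points} points to customer {event['customer_id']}"


def send_discount_email(event: Event) -> str:
    raise RuntimeError("email provider unavailable")  # simulated outage


PURCHASE_CONSUMERS: List[Consumer] = [
    charge_card,
    start_shipping,
    add_reward_points,
    send_discount_email,
]


def fan_out(event: Event, consumers: List[Consumer]) -> Dict[str, str]:
    """Hand one event to every consumer, isolating failures from each other."""
    results: Dict[str, str] = {}
    for consumer in consumers:
        try:
            results[consumer.__name__] = consumer(event)
        except Exception as exc:  # one failing service must not block the rest
            results[consumer.__name__] = f"failed: {exc}"
    return results


if __name__ == "__main__":
    purchase = {"order_id": "A-1", "customer_id": "c-9",
                "amount_cents": 2599, "postcode": "94107"}
    for name, outcome in fan_out(purchase, PURCHASE_CONSUMERS).items():
        print(f"{name}: {outcome}")
```

The simulated email outage does not stop the charge, shipping, or rewards steps, which illustrates why teams can ship and fix individual services without being blocked by their neighbors.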

Differences Between Monolithic & Event-Driven Architecture:

- Monolithic software applications use functions and classes to process data in a tightly coupled relationship between the code, database, and web server runtime environment. Event-driven architecture is loosely coupled, with services communicating through events and APIs.

- Event-driven architecture with Apache Kafka as the MQ offers improved fault tolerance, looser dependencies between services, and simpler horizontal scalability compared to monolithic systems, which will often fail at peak load with potential data loss.

- Monolithic applications rely on message queues (MQs) for batch processing, whereas the pub-sub message model of event streaming architecture allows many subscribers to consume the same events, with a broker such as Apache Kafka managing connections.

Apache Kafka is used in event-driven architecture to queue event data efficiently at enterprise or high-performance computing (HPC) scale, with replication designed to prevent data loss as pub-sub messages are routed between services. Event data can be stored by Apache Kafka via Storm in a SQL/NoSQL database for further processing and analytics with microservices. Event-driven architecture can also leverage business metrics like clicks, search keywords, URL entry points, user browser, device type, etc. as source data for personalizing web/mobile app or IoT device displays. Additionally, operational metrics from networks can be used to improve security, support fraud detection in transaction processing, or maintain anti-virus and intruder scanning on I/O traffic. The complexity of point-to-point data pipelines requires an MQ with a central log. The development of Apache Kafka addressed this need, offered an attainable solution to developers, and significantly contributed to the rise of event-driven microservices.
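The "central log" idea can be illustrated with a toy append-only log in which each consumer group tracks its own read position. This is a simplified model written for this article, not Kafka's client API: the `EventLog` class and its `append`, `poll`, and `seek` methods are hypothetical names, and the sketch ignores partitions, replication, and persistence.

```python
from typing import Any, Dict, List


class EventLog:
    """Toy append-only log: reading a record never removes it."""

    def __init__(self) -> None:
        self._records: List[Any] = []
        self._offsets: Dict[str, int] = {}  # consumer group -> next offset to read

    def append(self, record: Any) -> int:
        """Add a record at the end of the log and return its offset."""
        self._records.append(record)
        return len(self._records) - 1

    def poll(self, group: str, max_records: int = 10) -> List[Any]:
        """Return the next batch for this group and advance only its offset."""
        start = self._offsets.get(group, 0)
        batch = self._records[start:start + max_records]
        self._offsets[group] = start + len(batch)
        return batch

    def seek(self, group: str, offset: int) -> None:
        """Move a group's position, for example to replay history."""
        if not 0 <= offset <= len(self._records):
            raise ValueError("offset out of range")
        self._offsets[group] = offset


if __name__ == "__main__":
    log = EventLog()
    for click in ("home", "pricing", "signup"):
        log.append({"type": "click", "page": click})

    print(log.poll("analytics", max_records=2))  # first two events
    print(log.poll("fraud-check"))               # all three, independently
    log.seek("analytics", 0)                     # replay from the start
    print(log.poll("analytics"))
```

Because every group keeps its own offset, one producer can feed any number of downstream services without a separate point-to-point pipeline for each pair.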

To meet the demand for real-time, personalized product experiences, businesses are increasingly turning to event-driven web and mobile applications and refusing to limit themselves to day-late batch processing. Real-time extract, transform, and load (ETL) across managed data pipelines is a key aspect of event streaming architecture for these teams. All-in-one event streaming platforms like Keen allow developers to get up and running quickly by using fully managed, pre-configured ‘big data’ infrastructure. Keen’s event streaming platform offers a Stream API with SDKs, Kafka connectors, HTML snippets, and inbound webhooks to serve as the foundation for event-driven applications. Applications can then consume this event data directly from these streams in Keen. In addition to the streaming functionality, Keen also offers data enrichments, persistent storage, real-time analytics, and data visualizations for all data processed by the platform.

Migrating Monolithic Apps to Event-Driven Architectures

The migration towards event-driven architecture began in 2011, when LinkedIn open-sourced Apache Kafka, which it had built to manage the data requirements of one of the world’s most popular social networking sites. It continues today as more and more businesses seek to become data-driven organizations and adopt real-time software services and analytics. Enterprises and SMBs are increasingly modernizing their legacy monolithic applications by migrating towards an event-driven architecture. This is largely due to the benefits event-driven architectures carry, including loose coupling to underlying hardware through microservice support, elastic or horizontal scale, fault tolerance, real-time data analytics, improved agility, and compliance automation. In addition, microservices can be compartmentalized into modules, allowing developers to apply best practices from object-oriented software design and avoid the problems associated with managing monolithic application codebases over time.

Monolithic applications are increasingly being converted to API-based services, both for better performance and to simplify many database problems for websites and mobile applications at scale. The best practice for migrating an application is to refactor it incrementally rather than rewrite it from top to bottom. NGINX describes the process as:

“Instead of a Big Bang rewrite, you should incrementally refactor your monolithic application. You gradually build a new application consisting of microservices, and run it in conjunction with your monolithic application. Over time, the amount of functionality implemented by the monolithic application shrinks until either it disappears entirely or it becomes just another microservice.” – Overview of Refactoring to Microservices (NGINX, 2016)
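One common way to run the new services alongside the monolith is to put a thin router in front of both and move routes across one at a time, an approach often called the strangler pattern. The Python below is a minimal sketch of that idea. The `IncrementalRouter` class, the handler functions, and the URL prefixes are hypothetical and stand in for a real reverse proxy or API gateway.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


def monolith(path: str) -> str:
    return f"monolith handled {path}"


def billing_service(path: str) -> str:
    return f"billing microservice handled {path}"


class IncrementalRouter:
    """Send migrated URL prefixes to new services, everything else to legacy."""

    def __init__(self, legacy: Handler) -> None:
        self._legacy = legacy
        self._migrated: Dict[str, Handler] = {}

    def migrate(self, prefix: str, service: Handler) -> None:
        """Mark a URL prefix as now owned by a microservice."""
        self._migrated[prefix] = service

    def handle(self, path: str) -> str:
        # The longest matching prefix wins, so nested routes can move separately.
        for prefix in sorted(self._migrated, key=len, reverse=True):
            if path.startswith(prefix):
                return self._migrated[prefix](path)
        return self._legacy(path)


if __name__ == "__main__":
    router = IncrementalRouter(legacy=monolith)
    print(router.handle("/billing/invoices"))   # still the monolith

    router.migrate("/billing", billing_service)
    print(router.handle("/billing/invoices"))   # now the microservice
    print(router.handle("/account/settings"))   # untouched routes stay put
```

Each call to `migrate` shrinks the monolith's share of traffic a little, and any route can be pointed back at the legacy handler if the new service misbehaves.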

Event-Driven Applications: Prominent Use Cases

As businesses have migrated towards event-driven architectures, many established products have used the newfound ability to react to events in near real time to better address the challenges faced by their users. Disruptive new use cases like Digital Experience Platforms (DXPs) and personalization engines have also emerged. Below, we cover example use cases that have greatly benefited from leveraging distributed, event-driven architectures.

- Pay-as-you-go billing models that charge users only for what they use, or per event, were popularized by giants like AWS, Twilio, and Datadog. Chargify, a leading billing solution for B2B SaaS, packaged this functionality into their Events-Based Billing product, offering their users an out-of-the-box solution for creating flexible, multi-dimensional, event-based pricing models that disrupt the traditional, batch-processed metered billing model.

- Real-time monitoring and alerting solutions like Splunk and Datadog enable complex business organizations to respond quickly to new information and become aware of critical business challenges faster, rather than relying on slow, end-of-day batch data to uncover an issue.

- IoT organizations can manage their product line in real-time with software support on cloud hardware across a wide variety of applications. Whether it’s for home automation, connectivity data, asset tracking, or energy sensor readings, reacting in near real-time to an event is a critical component.

- Gartner’s Magic Quadrant for 2020 listed Adobe, Sitecore, Acquia, Liferay, Episerver, and Bloomreach as the industry leaders for Digital Experience Platforms (DXPs). Salesforce, SAP, & Oracle are the main challengers. The advantage of DXPs is that they allow businesses to build directly upon an organization’s event streaming data assets using secure APIs to offer real-time content and product recommendations to users. Gartner notes that “this technology can thrive in a digital business ecosystem via API-based integrations with adjacent technologies.”

- Marketing Technology (MarTech) is a broad category of software that developed from innovations in cloud data center management. MarTech operates in support of “big data” at enterprise scale as a major component of both event streaming architecture and DXPs. Marketing technology broadly includes the statistical data analytics software used for advertising campaigns, web promotions, email newsletter management, sales automation, ecommerce optimization, SEO, and B2B/B2C lead management based on data science.

- Personalization engines and Customer Data Platforms (CDPs) are two of the most important aspects of marketing technology to consider when integrating with DXP operations. Gartner reported the Magic Quadrant leaders in the personalization engine sector to be Dynamic Yield, Evergage, Monetate, Certona, Adobe, and Qubit. For customer data platforms, G2 Research recognized Leadspace, Blueconic, Lytics, and Blueshift as exemplary for their use of AI in building personalization engines based on ML-trained algorithms. All of these vendors support the “big data” requirements of enterprise groups and SMBs with API-driven data analytics plans.
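To illustrate the pay-as-you-go billing use case from the list above, the following Python sketch prices a stream of usage events along two dimensions, metric and region. The price list, event fields, and function name are made up for this example and do not describe any vendor's actual pricing or API.

```python
from collections import Counter
from decimal import Decimal
from typing import Any, Dict, Iterable, Tuple

PriceKey = Tuple[str, str]  # (metric, region)

# Hypothetical per-unit prices in dollars.
PRICES: Dict[PriceKey, Decimal] = {
    ("sms_sent", "us"): Decimal("0.0075"),
    ("sms_sent", "eu"): Decimal("0.0400"),
    ("api_call", "us"): Decimal("0.0001"),
}


def invoice_total(events: Iterable[Dict[str, Any]],
                  prices: Dict[PriceKey, Decimal]) -> Decimal:
    """Sum usage per (metric, region) and price it, rounded to cents.

    Raises KeyError if an event has no matching price, so unpriced usage
    is never silently billed at zero.
    """
    usage: Counter = Counter()
    for event in events:
        usage[(event["metric"], event["region"])] += event.get("quantity", 1)
    total = sum((prices[key] * qty for key, qty in usage.items()), Decimal("0"))
    return total.quantize(Decimal("0.01"))


if __name__ == "__main__":
    usage_events = [
        {"metric": "sms_sent", "region": "us", "quantity": 200},
        {"metric": "sms_sent", "region": "eu", "quantity": 10},
        {"metric": "api_call", "region": "us", "quantity": 5000},
    ]
    print(invoice_total(usage_events, PRICES))
```

`Decimal` is used instead of floating point so that fractional-cent unit prices add up exactly before the final rounding step.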

Building Event-Driven Applications: Key Points for SMBs

When building an event-driven application, developers need to consider which event streaming solution to use. Most teams take one of two approaches: buy, using a managed cloud Platform-as-a-Service (PaaS) or Infrastructure-as-a-Service (IaaS) solution, or build, using open-source software and cloud service provider products to create a DIY solution in-house. We cover this at a high level below, but for a deeper dive check out our comprehensive guide: Event Streaming and Analytics: Everything a Dev Needs to Know.

Buying a PaaS solution like Keen carries a number of advantages over building in-house especially for smaller teams. Time-to-implement is quick—taking as little as days—due to pre-built data infrastructure being accessible via an API. Keen also offers programmers SDKs that work with different programming languages to build event streaming functionality into existing apps. Data streams can also be implemented using HTML snippets, Kafka connectors, or inbound webhooks. For migrating legacy monolithic applications, Kafka Source Connectors can be used to transform monolithic applications into an API source for a Kafka-based event pipeline. Purchasing a PaaS or IaaS solution is also less risky than building usable streaming and analytics infrastructure internally, especially for teams with limited DevOps resources. Finally, when looking at the total cost of ownership for an event streaming solution, buying often wins out as the more affordable option for growing organizations.

Although buying an event streaming solution carries many advantages, building one yourself should be seriously considered by larger enterprises with the necessary engineering resources. The implementation cost and overhead will be more significant up front, but having dedicated infrastructure with custom configuration of operations can be valuable as your organization scales. Multi-tenant PaaS event streaming solutions may throttle your performance to ensure that the overall experience for other users is not impacted, whereas bespoke infrastructure dedicated to your workload can be built to scale with it. The marginal impact on performance may not be worth the risk for SMBs, but for larger organizations, building custom infrastructure should certainly be considered.


Keen: Powering your Event-Driven Architecture

SMBs have the option to build event streaming architecture solutions for their IT operations internally using solutions like GCP Dataflow, AWS Kinesis, Microsoft Azure, or IBM Event Streams. Alternatively, they should also consider using a managed cloud platform with pre-built big data infrastructure, like Stream, to make it easier to adopt event streaming architecture on an organizational level, as there is no need to worry about maintaining data center hardware. Managed cloud solutions for event streaming architecture lower the overall costs to deploy, scale, centrally log, and continually monitor resources with the trade-off of having recurring costs due to the subscription model. This solution makes particular sense for SMBs who have limited DevOps and engineering resources.

Keen’s event streaming and analytics platform is based on Apache Kafka with a Cassandra (NoSQL) database for persistent storage of event data. Programming teams can use Keen as a cloud resource for distributed hardware when building event-driven products and applications with real-time analytics. Keen offers a Stream API with SDKs, Kafka connectors, HTML snippets, and inbound webhooks to help developers seamlessly implement real-time, enriched event streams for their product or application. Applications can consume this event data directly from these streams in Keen. It is also possible to further integrate with ksqlDB, Materialize, and other API services to build custom, event-driven applications. In addition to the streaming functionality, Keen offers unlimited storage, real-time analytics, and embeddable visualizations for streamed data.

Keen is more affordable, has lower implementation overhead, and offers a faster time-to-market for Agile programming teams than enterprise streaming platforms like Cloudera and Confluent. Against managed Kafka solutions such as Aiven.io or CloudKarafka, Keen offers the notable advantage of a complete event streaming platform with data enrichment, persistent storage, and usable analytics included, so there is no need to build additional solutions on top of your managed Kafka infrastructure.

Learn more about Keen & Apache Kafka Event Streaming Architecture: read the complete guide, Event Streaming and Analytics: Everything a Dev Needs to Know.